From Data Bottleneck to Design Breakthrough: How 'UNO' is Reshaping AI Image Generation

Why Traditional Image Generators Break Down in the Real World

Despite recent strides in generative AI, there’s one glaring limitation: consistency across subjects and scenes. Ask a model to create a cat on a skateboard? Easy. Ask it to maintain that same cat’s features, pose, and outfit across five new contexts? That’s where things fall apart.

This breakdown stems from the industry’s dependence on scarce, high-quality paired datasets. Without them, models can’t learn to generate visually consistent outputs with fine-grained control, particularly for multi-subject scenes or user-specific customizations. This is where most systems fail to scale in commercial deployments.

The Breakthrough Idea: Let the Model Improve Its Own Training Data

The research team behind “Less-to-More Generalization” flips the script with a clever idea: what if the model could generate its own data—then learn from it?

Their proposed solution is a “model-data co-evolution” pipeline, where an initial model starts with simple single-subject scenes, generates its own training data, and gradually moves toward more complex multi-subject setups. With each iteration, both the model’s precision and the data quality improve—creating a feedback loop of escalating capability.
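To make the feedback loop concrete, here is a minimal sketch of how such a co-evolution round could be organized. It is not the authors' code: `co_evolve`, `generate_pairs`, `keep_pair`, `train`, and `subject_schedule` are hypothetical names introduced for illustration, and the real pipeline's models and training steps are replaced by plain callables.

```python
from typing import Callable, List, Tuple

# Placeholder type: (reference image id, target image id). Hypothetical.
Pair = Tuple[str, str]


def co_evolve(
    model: object,
    generate_pairs: Callable[[object, int], List[Pair]],
    keep_pair: Callable[[Pair], bool],
    train: Callable[[object, List[Pair]], object],
    subject_schedule: List[int],
) -> object:
    """Alternate between generating data with the current model and training on it.

    subject_schedule lists how many subjects each round targets,
    for example [1, 2] for single-subject first, then two subjects.
    """
    for num_subjects in subject_schedule:
        # 1. The current model produces candidate training pairs.
        candidates = generate_pairs(model, num_subjects)
        # 2. Only pairs that pass the quality check are kept.
        curated = [pair for pair in candidates if keep_pair(pair)]
        # 3. The model is updated on the curated pairs (skipped if none survive).
        if curated:
            model = train(model, curated)
    return model
```

The point of the structure is that the model returned by one round is the generator for the next, so data quality and model quality can rise together.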

This is not just a tweak to training—it’s a new paradigm for building generative systems in data-starved environments.

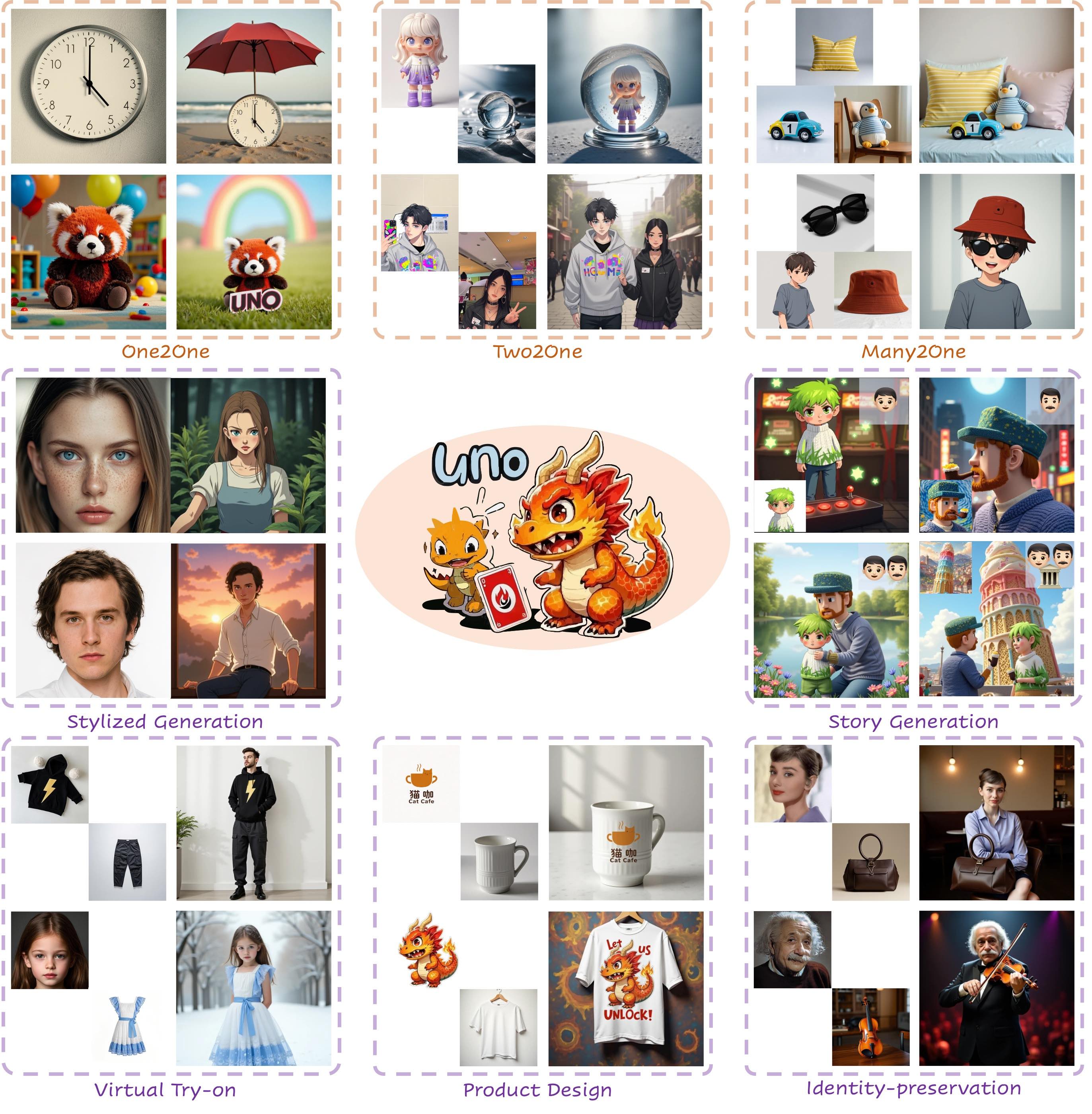

Meet UNO: The AI Model Built for High-Fidelity Customization

UNO (short for Universal Customization Network) is the technical engine behind this paradigm shift. It’s a custom-designed architecture based on diffusion transformers and optimized for visual control, text alignment, and compositional consistency.

🧠 Train Easy, Scale Hard: The Two-Stage Learning Strategy

UNO first trains on single-subject scenes to build a stable base. Only after mastering simple tasks does it tackle multi-subject compositions. This “simple-to-complex” strategy keeps training from destabilizing when multi-subject complexity is introduced too early, a failure mode the authors aim to avoid.
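One way to picture the two-stage schedule is as a batch sampler that switches data pools at a fixed step. The sketch below is an illustration only: the function name, the step counts, and the choice to draw stage-two batches exclusively from multi-subject data are assumptions, not details taken from the paper.

```python
import random
from typing import Iterator, List, Sequence, Tuple


def curriculum_batches(
    single_subject: Sequence[str],
    multi_subject: Sequence[str],
    stage1_steps: int,
    stage2_steps: int,
    batch_size: int,
    seed: int = 0,
) -> Iterator[Tuple[str, List[str]]]:
    """Yield (stage name, batch) pairs: single-subject data first, then multi-subject."""
    if not single_subject or not multi_subject:
        raise ValueError("both data pools must be non-empty")
    rng = random.Random(seed)  # seeded so the schedule is reproducible
    for step in range(stage1_steps + stage2_steps):
        in_stage1 = step < stage1_steps
        pool = single_subject if in_stage1 else multi_subject
        batch = [rng.choice(pool) for _ in range(batch_size)]
        yield ("single" if in_stage1 else "multi", batch)
```

A training loop would iterate over this generator and apply the same optimizer step in both stages; only the data source changes.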

🧪 Build Data from Scratch, Then Filter It Like a Pro

UNO uses a synthetic data curation pipeline, where it generates its own high-resolution, subject-paired images using diffusion models. But not all self-generated data is equal. A smart filtering mechanism powered by vision-language models weeds out inconsistencies and ensures that only the best training pairs make the cut.
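The filtering step can be sketched as a simple threshold over a consistency score. Everything named here is hypothetical: `SubjectPair`, `filter_pairs`, and the `consistency_score` callable stand in for whatever the vision-language model actually returns, and the 0.8 threshold is an arbitrary example value.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List


@dataclass(frozen=True)
class SubjectPair:
    """A candidate training pair: a reference image and a generated target."""
    reference_path: str
    target_path: str


def filter_pairs(
    pairs: Iterable[SubjectPair],
    consistency_score: Callable[[SubjectPair], float],
    threshold: float = 0.8,  # example value, not from the paper
) -> List[SubjectPair]:
    """Keep only pairs whose subject-consistency score meets the threshold.

    consistency_score is assumed to wrap a vision-language model that rates,
    from 0.0 to 1.0, how well the target preserves the reference subject.
    """
    return [pair for pair in pairs if consistency_score(pair) >= threshold]
```

In practice the scorer is the expensive part; keeping it behind a callable makes it easy to swap models or cache results.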

📐 UnoPE: A Spatial Solution to Attribute Confusion

Multi-subject scenes often result in mismatched attributes or blended identities. UNO addresses this with **Universal Rotary Position Embedding (UnoPE)**, a method that balances layout information from text prompts with the visual features of reference images. The result? Clean compositions where each subject retains its identity.
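One plausible reading of this idea is that each reference image receives position indices that do not overlap with the target image or with other references, so the model cannot simply copy a reference's spatial layout. The sketch below assumes a diagonal-offset scheme, where every image's grid starts where the previous one ended in both rows and columns. That scheme and the function name `diagonal_position_ids` are assumptions made for illustration, not a reproduction of the authors' implementation.

```python
from typing import List, Tuple


def diagonal_position_ids(
    grid_sizes: List[Tuple[int, int]],
) -> List[List[Tuple[int, int]]]:
    """Assign (row, col) position ids to token grids laid out along a diagonal.

    grid_sizes[0] is the (height, width) of the target latent grid and the
    remaining entries are reference images. Each grid is shifted by the
    accumulated height and width of the grids before it, so no two images
    share a row index or a column index.
    """
    all_ids = []
    row_offset, col_offset = 0, 0
    for height, width in grid_sizes:
        ids = [
            (row_offset + r, col_offset + c)
            for r in range(height)
            for c in range(width)
        ]
        all_ids.append(ids)
        row_offset += height
        col_offset += width
    return all_ids
```

These indices would then feed the rotary embedding in place of the default per-image grid coordinates.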

How UNO Performs: State-of-the-Art, Inside and Out

UNO isn’t just a technical novelty. The paper backs its claims with strong results on established benchmarks.

- Outperformed leading models on DreamBench, with top-tier DINO and CLIP-I scores in both single- and multi-subject image generation tasks.

- User studies consistently preferred UNO’s outputs across metrics like subject fidelity, visual appeal, and prompt adherence.

- Ablation tests indicate that each component (data generation, UnoPE, and the cross-modal strategy) adds measurable value to the system’s capabilities.
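For readers unfamiliar with the DINO and CLIP-I scores mentioned above: both are commonly computed as the average cosine similarity between embeddings of generated images and embeddings of reference images, with the embeddings coming from a DINO or CLIP image encoder respectively. The sketch below shows only that averaging step on precomputed vectors. The function names are hypothetical, and the encoder is deliberately left out.

```python
import math
from typing import List, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("embeddings must be non-zero")
    return dot / (norm_a * norm_b)


def subject_fidelity(
    generated: List[Sequence[float]],
    references: List[Sequence[float]],
) -> float:
    """Mean pairwise cosine similarity between generated and reference embeddings."""
    scores = [cosine_similarity(g, r) for g in generated for r in references]
    if not scores:
        raise ValueError("need at least one generated and one reference embedding")
    return sum(scores) / len(scores)
```

A higher value means the generated images sit closer to the reference subject in the encoder's feature space, which is why the metric is used as a proxy for subject fidelity.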

For businesses seeking deployable AI solutions, this kind of quantitative rigor matters. It signals readiness for commercial integration—not just lab demos.

6 Markets That Can Immediately Profit from UNO's Capabilities

UNO’s practical applications span multiple high-growth industries. Here’s where it can deliver ROI today:

🛍 E-Commerce and Virtual Try-On

Online retailers can use UNO to let customers try on outfits or accessories—without photoshoots or manual edits. Consistent subject preservation ensures personalized results without losing identity.

🎨 Design and Creative Agencies

From digital characters to ad visuals, creative teams can leverage UNO for rapid prototyping and brand-consistent campaigns, while minimizing repetitive manual work.

🚗 Automotive and Industrial Product Visualization

UNO allows product teams to render concept visuals with precise feature control. This cuts time from ideation to prototype and lowers reliance on photorealistic mockups.

📱 Personalized Content Platforms

Apps offering personalized avatars, character-based storytelling, or custom media generation can use UNO to scale content generation while keeping it user-specific.

🧥 Fashion Tech and DTC Startups

Custom fashion and direct-to-consumer platforms can use UNO to simulate garment variations across models, offering personalized lookbooks and real-time customization.

🎬 Media and Entertainment

From animated films to interactive content, UNO’s ability to maintain character consistency across scenes makes it ideal for virtual productions and storyboarding.

What to Watch: Three Risks Worth Noting

Every breakthrough has trade-offs. Investors and enterprise teams should weigh these carefully:

1. Heavy Compute Requirements

Training UNO at scale still demands substantial GPU resources, making initial adoption costly for smaller teams. Cloud-based pipelines may mitigate this—but at a price.

2. Bias in the Synthetic Feedback Loop

UNO relies on existing models to create its synthetic data. If those base models contain latent biases, they may get amplified through self-training. This raises ethical and accuracy concerns, especially in applications involving human likeness or cultural diversity.

3. Domain-Specific Limitations

UNO excels in generic and consumer-facing imagery. But its effectiveness in highly regulated or niche domains—like medical imaging or engineering blueprints—remains to be validated. Customization here would require domain-specific training regimes.

A Blueprint for Scalable, Controllable Generative AI

The UNO architecture and co-evolution strategy are not just research artifacts. They are blueprints for the next generation of scalable, controllable AI systems. By letting models iteratively improve their own training data, Wu and his co-authors on “Less-to-More Generalization” have charted a path forward for AI applications that demand precision, personalization, and performance.

For business leaders, this opens up a powerful proposition: custom design at the speed of code.